Scraping Airbnb website with Python, Beautiful Soup and Selenium

This article is a part of an Educational Data Science Project — Airbnb Analytics

The idea is to implement an example Data Science project using the Airbnb website as a source of data. Please read the stories here:

Part 0 - Intro to the project

Part 1 - Scrape the data from Airbnb website (this article)

Part 2 - More details to Web Scraping

Part 3 - Explore and clean the data set

Part 4 - Build a machine learning model for listing price prediction

Part 5 - Explore the results and apply the model

Kudos to Airbnb

Most of us know this service, which lets people rent private accommodation at affordable prices. The idea of a marketplace for travel, combined with excellent execution, led Airbnb to a successful IPO at the end of 2020 [1].

The company also plays an important role in the IT community as an advocate for open-source projects [2], the best known of which is Airflow.

Every listing presented on the website has very detailed information: from the type of Wi-Fi to the list of cooking utensils. Unfortunately, Airbnb doesn’t provide any public API, so for our small educational project we will use a workaround — web scraping.

Getting Started

We will use Python as a programming language because it is perfect for prototyping, has an extensive online community and is my go-to language😉. Moreover, there are plenty of libraries for basically everything one could need. Two of them will be our main tools today:

- Beautiful Soup — Allows easy extraction of data from HTML documents

- Selenium — A multi-purpose tool for automating web-browser actions

I work on Windows and, to make life a bit easier, tend to use WSL as much as possible. Installing Python packages is usually easy for me: I just run pip/conda install package. With Selenium it was slightly more complicated, but hopefully it won’t take much of your time. Here you can find a very nice guide for setting up Selenium on WSL.

Planning the scraping

Let’s look at a typical user journey. A visitor enters the destination and the desired dates, then clicks the search button. Airbnb’s ranking engine then outputs some results for the user to choose from. That is a search page: multiple listings at once, with only brief info on each of them.

After browsing for a while, our visitor clicks on a listing and gets redirected to a detail page, where they can find various information about the selected accommodation.

We are interested in scraping all useful information, so we’ll process both types of pages: search and detail. We also have to think of the listings that are hidden deep below the top search page. There are 20 results per typical search page, and up to 15 pages per destination (Airbnb restricts further access).

Looks pretty simple. We have to implement 2 major parts of the program: (1) scanning a search page and (2) extracting data from a detail page. Let’s write some code!

Accessing the listings

Scraping webpages is extremely simple with Python. Here is the function which extracts HTML and puts it into a Beautiful Soup object:
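A minimal sketch of such a function, assuming the requests package handles the download. The names scrape_page and html_to_soup, as well as the timeout value, are illustrative choices and not taken from the project repo:

```python
import requests
from bs4 import BeautifulSoup


def html_to_soup(html: str) -> BeautifulSoup:
    """Parse raw HTML into a navigable Beautiful Soup tree."""
    return BeautifulSoup(html, "html.parser")


def scrape_page(page_url: str, timeout: float = 10.0) -> BeautifulSoup:
    """Download a page and return it as a Beautiful Soup object."""
    response = requests.get(page_url, timeout=timeout)
    # Fail loudly on 4xx/5xx instead of silently parsing an error page.
    response.raise_for_status()
    return html_to_soup(response.text)
```

Splitting parsing from downloading keeps the parsing step easy to try on a saved HTML file without touching the network.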

Beautiful Soup allows us to easily navigate through an HTML tree and access its elements. For example, obtaining the text from a “div” object with a class “foobar” is as easy as:

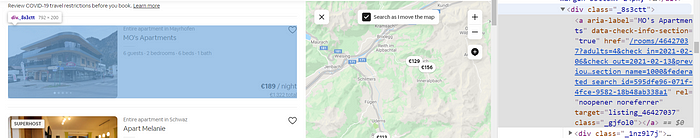

text = soup.find("div", {"class": "foobar"}).get_text()

On the Airbnb search page the objects of our interest are individual listings. To access them we have to define their tag types and class names. The easiest way to do that is to inspect the page with the Chrome developer tools (press F12).

The listing is encapsulated in a “div” object with a class “_8s3ctt”. Additionally, we know that one search page contains 20 separate listings. We can grab all of them at once using Beautiful Soup method findAll:
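As a rough sketch, grabbing the listings could look like this. It uses find_all, the current spelling of findAll in Beautiful Soup 4. The sample HTML and the helper name extract_listings are made up for illustration; on the real site the soup would come from a downloaded search page:

```python
from bs4 import BeautifulSoup

# Tiny stand-in for a search page (hypothetical markup, not real Airbnb HTML).
SAMPLE_HTML = """
<div class="_8s3ctt"><a href="/rooms/1">Listing one</a></div>
<div class="_8s3ctt"><a href="/rooms/2">Listing two</a></div>
<div class="other">Not a listing</div>
"""


def extract_listings(soup, listing_class="_8s3ctt"):
    """Return every <div> whose class list contains the listing class."""
    return soup.find_all("div", {"class": listing_class})


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
listings = extract_listings(soup)
print(len(listings))  # number of listing blocks found in the sample
```

Keeping the class name as a parameter makes it cheap to update when the site changes its markup.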

A small downside of scraping: the aforementioned class name is only temporary, as Airbnb could change it in a minute with any upcoming update.

Now let’s finally get to the point of the whole post — data extraction.

Extracting listing basic features

From the search page we can already collect high-level information about the listings, such as name, total price, average rating and some others.

All these features are located in different HTML objects with different classes. That means we could write multiple single extractions — one per feature:

name = soup.find('div', {'class':'_hxt6u1e'}).get('aria-label')

price = soup.find('span', {'class':'_1p7iugi'}).get_text()

...

But I decided to over-engineer things right from the start of the project and write a unified extraction function, which is reusable for accessing different elements on the page.
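One way such a unified function could look is sketched below. The rule format (a dict with "tag", "class" and an optional "get" key) is an assumption made for this example and may differ from the version in the repo:

```python
from bs4 import BeautifulSoup


def extract_element_data(soup, params):
    """Look up one element and return its text or one of its attributes.

    `params` is a hypothetical rule format:
    {"tag": ..., "class": ..., "get": ...}, where "class" and "get" are optional.
    Returns None when no element matches.
    """
    attrs = {"class": params["class"]} if "class" in params else {}
    element = soup.find(params["tag"], attrs)
    if element is None:
        return None
    if "get" in params:
        # Attribute value, or None if the attribute is absent.
        return element.get(params["get"])
    return element.get_text()


# Made-up markup that reuses the class names from the single extractions above.
html = '<div class="_hxt6u1e" aria-label="Cozy flat"></div><span class="_1p7iugi">$120</span>'
soup = BeautifulSoup(html, "html.parser")
name = extract_element_data(soup, {"tag": "div", "class": "_hxt6u1e", "get": "aria-label"})
price = extract_element_data(soup, {"tag": "span", "class": "_1p7iugi"})
missing = extract_element_data(soup, {"tag": "span", "class": "no-such-class"})
```

Returning None for a missing element, instead of raising, lets a long scraping run continue when a single listing lacks a field.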

Now we have everything we need for processing the whole page with listings and extracting basic features from every single one of them. Here is an example that extracts only 2 features; the full code is in the Git repo.
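A compact sketch of that page-level loop, under the assumption that each feature is described by a (tag, class, attribute) rule. The RULES table, the function name extract_page_features and the sample markup are illustrative only:

```python
from bs4 import BeautifulSoup

# Hypothetical rules: feature name -> (tag, class, attribute or None for text).
RULES = {
    "name": ("div", "_hxt6u1e", "aria-label"),
    "price": ("span", "_1p7iugi", None),
}

# Made-up search page with two listings; the second one has no price.
SAMPLE_HTML = """
<div class="_8s3ctt">
  <div class="_hxt6u1e" aria-label="Cozy flat"></div><span class="_1p7iugi">$120</span>
</div>
<div class="_8s3ctt">
  <div class="_hxt6u1e" aria-label="Mountain hut"></div>
</div>
"""


def extract_page_features(soup, rules=RULES, listing_class="_8s3ctt"):
    """Return one dict of features per listing found on a search page."""
    rows = []
    for listing in soup.find_all("div", {"class": listing_class}):
        row = {}
        for feature, (tag, cls, attribute) in rules.items():
            element = listing.find(tag, {"class": cls})
            if element is None:
                row[feature] = None  # keep the column even when the element is missing
            elif attribute:
                row[feature] = element.get(attribute)
            else:
                row[feature] = element.get_text()
        rows.append(row)
    return rows


rows = extract_page_features(BeautifulSoup(SAMPLE_HTML, "html.parser"))
```

A list of dicts like this converts directly into a table, for example with pandas.DataFrame(rows).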

Accessing all pages per location

The more the better! Especially when we’re talking about the data. Airbnb gives us access to up to 300 listings per location and we’re gonna scrape them all. There are multiple ways to paginate search results. I think the easiest is to observe how the URL changes while we’re clicking on “next page” and then mock it in our script.

Just adding a parameter “items_offset” to our initial URL will do the job. Let’s build a list with all links per location.
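Building that list could be sketched as follows, using only the standard library. The function name build_urls and the example search URL in the usage note are assumptions; the 20 listings per page and 15 pages per location come from the limits described earlier:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_urls(start_url, listings_per_page=20, pages_per_location=15):
    """Return one search URL per results page by varying items_offset."""
    parts = urlsplit(start_url)
    # Drop any offset already present so it is not duplicated.
    base_query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "items_offset"
    ]
    urls = []
    for page in range(pages_per_location):
        query = base_query + [("items_offset", str(page * listings_per_page))]
        urls.append(urlunsplit(parts._replace(query=urlencode(query))))
    return urls
```

For a hypothetical starting link such as https://www.airbnb.com/s/Mayrhofen/homes?adults=2, this yields 15 URLs with offsets 0, 20, and so on up to 280.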

Half of the work is done here. We can now run our parser and get the basic features for all listings within a location. All we have to input is the starting link. Now it’ll get more interesting.

Dynamic pages

If you watched a “user journey GIF” above, you might have noticed that loading a detail page takes some time. Quite some time actually, around 3–4 seconds. Until then we could access only the base HTML of the web page, which doesn’t contain all of the listing features we wanna scrape.

Unfortunately, the requests package doesn’t allow us to wait until all page elements are loaded. But Selenium does. It can mimic real user behavior: wait for all the JavaScript to load, scroll, click on buttons, fill in forms and much more.

Waiting and clicking is what we’re gonna do now. To access the amenities and price details we have to click on corresponding elements.

To wrap it up our actions now are:

- Initialize Selenium driver

- Open a detail page

- Wait till the buttons are loaded

- Click on the buttons

- Wait for a bit till all the elements are loaded

- Extract HTML code

Let’s put them into a Python function.

Extracting detail listing data is now a matter of some time only, as all the necessary ingredients are at our disposal. We have to thoroughly inspect the web page with a Chrome developer tool, write down all the names and classes of HTML elements, feed all of that to extract_element_data.py and be happy with the results.

But…

Parallel execution

Scraping all 15 search pages per location is quite fast, as we don’t have to wait for JavaScript elements to be loaded. That means after several seconds we’ll have the dataset with basic features for all listings. Detail page URLs are among them.

We know that processing of one detail page takes at least 5–6 seconds (which we have to wait till the page renders) + some time for the script to compute. At the same time, I observed that this whole process utilizes the CPU of my laptop only at ~3–8% level. Let’s make it work harder!

Instead of visiting 300 webpages in turn in one huge loop, we can split the URLs into batches and loop over these batches. To define the optimal batch size we have to experiment a bit. After some trials, I chose a size of 8.

from multiprocessing import Pool

with Pool(8) as pool:

    result = pool.map(scrape_detail_page, url_list)

If I tried to run more processes at once, it slowed down my machine drastically. The Chrome browser needed more than 5 seconds to load all the elements on the page and hence the whole scraping was blocked or even failed.

Here I should mention that I’m not a computer scientist and have only basic understanding of parallelization. If you want to learn it better, start from this article.

Results

After wrapping our functions into a nice little program and running it over a destination (I was experimenting with Mayrhofen) we got our raw dataset. Congratulations!

Here is a link to the actual file if you’d want to explore it.

The downside of dealing with real-world data is that it’s not perfect. There are “empty” columns, and many fields require cleaning and preprocessing. Some features turned out to be useless, as they are either always empty or populated with the same value everywhere.

We could also improve the script in some aspects. Playing around with different parallelization approaches might make it faster. Tuning the waiting times to the actual loading times of the pages might result in fewer empty columns.

Recap

We’ve done quite some work. Thank you for reading to the end. Now it’s time to compile a brag list. We’ve learned how to:

- scrape webpages with Python and Beautiful Soup

- deal with dynamic pages using Selenium

- parallelize the script with multiprocessing

The full script and a data sample can be found on GitHub:

Feel free to watch our webinar where we discussed the first part of this article — Web scraping Airbnb search pages with Python and Beautiful Soup: